Introduction

This article is an overview of machine learning algorithms and a summary of my own learning. Reading it should quickly give you a clear picture of the main machine learning algorithms. I promise there will be no mathematical formulas or derivations, so it is suitable for light reading after dinner. I hope readers can pick up something useful without much effort.

This article is divided into three main parts. The first part introduces anomaly detection algorithms, which I personally find very useful for monitoring systems. The second part introduces several common machine learning algorithms. The third part is an introduction to deep learning and reinforcement learning. Finally, there is a summary of my own.

1. Anomaly Detection Algorithm

Anomaly detection, as the name suggests, refers to algorithms for detecting anomalies, such as abnormal network quality, abnormal user access behavior, and server, switch, or system faults. Since I can draw on it for monitoring, I introduce this part separately first.

Anomalies are defined as points that are "more likely to be separated": points that are sparsely distributed and far from densely populated groups. In statistical terms, a sparsely populated region of the data space is one where data occurs with very low probability, so data falling in such a region can be considered abnormal.

|

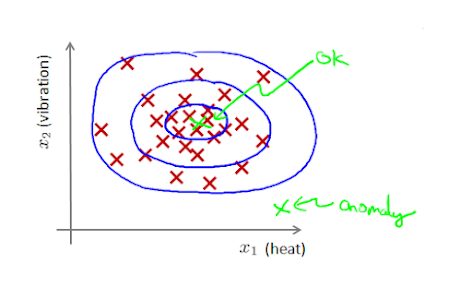

| Figure 1.1 Outliers appear to be far from normal points with high density |

As shown in Figure 1.1, data inside the blue circle is more likely to belong to this group, and the farther a point lies from it, the lower the probability that it belongs to the group.

The following is a brief introduction to several anomaly detection algorithms:

1.1 Distance-Based Anomaly Detection Algorithm

Figure 1.2 Distance-based anomaly detection

Idea: If a point does not have many friends around it, it can be considered an abnormal point.

Steps: Given a radius r, calculate the ratio of the number of points inside the circle centered on the current point with radius r to the total number of points. If the ratio is less than a threshold, the point can be considered an outlier.
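The steps above can be sketched in a few lines of Python. This is only a minimal illustration: the function name `distance_based_outliers`, the sample points, and the values of `radius` and `min_ratio` are assumptions chosen for the example, and the brute-force loop would be too slow for large data sets.

```python
import math

def distance_based_outliers(points, radius, min_ratio):
    """Return the points whose neighbourhood holds too small a share of the data."""
    outliers = []
    for i, p in enumerate(points):
        # Count the other points inside the circle of the given radius around p.
        neighbours = sum(
            1 for j, q in enumerate(points)
            if j != i and math.dist(p, q) <= radius
        )
        if neighbours / len(points) < min_ratio:
            outliers.append(p)
    return outliers

# Hypothetical data: four points close together and one far away.
points = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (0.15, 0.15), (5.0, 5.0)]
outliers = distance_based_outliers(points, radius=1.0, min_ratio=0.2)
print(outliers)
```

Both the radius and the threshold have to be tuned to the data; a radius that is too small makes every point look lonely.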

1.2 Depth-Based Anomaly Detection Algorithm

Figure 1.3 Depth-based anomaly detection algorithm

Idea: Outliers are far from dense groups and are often at the very edge of the group.

Steps: Connect the points of the outermost layer and assign this layer a depth value of 1; then connect the points of the next layer in and assign it a depth value of 2, and repeat. Points whose depth value is less than a certain value k can be considered outliers because they are the points farthest from the central population.
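One way to realize the "connect the outermost points" step in two dimensions is to peel off convex hulls one after another. The sketch below assumes that interpretation; the helper names (`cross`, `convex_hull`, `peeling_depths`) and the sample points are made up for illustration, and points lying exactly on a hull edge are treated as belonging to the next layer.

```python
def cross(o, a, b):
    """Z-component of the cross product (a - o) x (b - o)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def convex_hull(points):
    """Andrew's monotone chain: return the corner points of the convex hull."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def peeling_depths(points):
    """Give depth 1 to the outermost hull, depth 2 to the next one, and so on."""
    remaining = set(points)
    depths = {}
    depth = 1
    while remaining:
        layer = convex_hull(list(remaining))
        for p in layer:
            depths[p] = depth
        remaining -= set(layer)
        depth += 1
    return depths

# Hypothetical data: an outer square, an inner square, and a center point.
points = [(0, 0), (4, 0), (4, 4), (0, 4), (1, 1), (3, 1), (3, 3), (1, 3), (2, 2)]
depths = peeling_depths(points)
k = 2
outliers = sorted(p for p, d in depths.items() if d < k)
print(outliers)
```

With `k = 2` only the outermost layer is flagged; raising `k` flags deeper layers as well.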

1.3 Distribution-Based Anomaly Detection Algorithm

Figure 1.4 Gaussian distribution

Idea: When a data point deviates from the mean of the overall data by more than 3 standard deviations, it can be regarded as an abnormal point (the number of standard deviations can be adjusted to the actual situation).

Steps: Calculate the mean and standard deviation of the existing data. When a new data point deviates from the mean by more than 3 standard deviations, it is regarded as an outlier.
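As a rough sketch of this check in Python, using only the standard library: the function name `is_outlier`, the `n_sigmas` parameter, and the sample readings are assumptions for illustration, and the population standard deviation is used here although the sample version would work just as well.

```python
import statistics

def is_outlier(history, new_value, n_sigmas=3.0):
    """Flag new_value if it lies more than n_sigmas standard deviations from the mean."""
    mean = statistics.fmean(history)
    std = statistics.pstdev(history)
    return abs(new_value - mean) > n_sigmas * std

# Hypothetical monitoring readings that hover around 10.
history = [10.1, 9.8, 10.0, 10.3, 9.9, 10.2, 9.7, 10.0]
print(is_outlier(history, 10.4))
print(is_outlier(history, 12.0))
```

The check only makes sense when the data is roughly bell-shaped, as in Figure 1.4.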

1.4 Division-Based Anomaly Detection Algorithm

Figure 1.5 Isolation forest

Idea: If the data is repeatedly divided on some attribute, abnormal points are usually split off to one side very early, that is, they are isolated early. Normal points need many more divisions because they sit in large groups.

Steps: Construct multiple isolation trees as follows: at the current node, randomly select an attribute of the data and randomly select a value of that attribute, then divide all the data in the current node into left and right child nodes; if a child node is still shallow and still contains more than one data point, continue dividing it in the same way. Outliers show up as points with a very low average depth across all isolation trees, as shown in red in Figure 1.5.
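The random splitting can be tried out with a small, simplified Python sketch. It is not the full method from the isolation forest paper [3] (there is no subsampling and no score normalization), and all names, the depth limit, and the data are assumptions for illustration. Instead of storing whole trees, it follows only the branch that contains the point of interest, which is enough to measure that point's depth.

```python
import random

def isolation_depth(point, data, rng, depth=0, max_depth=12):
    """Depth at which `point` is isolated in one randomly grown isolation tree."""
    if len(data) <= 1 or depth >= max_depth:
        return depth
    attr = rng.randrange(len(point))       # randomly chosen attribute
    lo = min(row[attr] for row in data)
    hi = max(row[attr] for row in data)
    if lo == hi:                           # nothing to split on this attribute
        return depth
    split = rng.uniform(lo, hi)            # randomly chosen split value
    # Keep only the side of the split that contains `point`.
    if point[attr] < split:
        side = [row for row in data if row[attr] < split]
    else:
        side = [row for row in data if row[attr] >= split]
    return isolation_depth(point, side, rng, depth + 1, max_depth)

def average_depth(point, data, n_trees=100, seed=0):
    """Average isolation depth of `point` over n_trees random trees."""
    rng = random.Random(seed)
    return sum(isolation_depth(point, data, rng) for _ in range(n_trees)) / n_trees

# Hypothetical data: a dense group in the unit square plus one distant point.
rng = random.Random(42)
cluster = [(rng.random(), rng.random()) for _ in range(60)]
outlier = (10.0, 10.0)
data = cluster + [outlier]

scores = {p: average_depth(p, data) for p in data}
most_isolated = min(scores, key=scores.get)
print(most_isolated, scores[most_isolated])
```

The distant point is usually cut off by the very first split, so its average depth stays close to 1, while points inside the dense group need several more splits.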

2. Common Algorithms of Machine Learning

This part briefly introduces several common machine learning algorithms: k-nearest neighbors, k-means clustering, decision trees, naive Bayes classifiers, linear regression, logistic regression, hidden Markov models, and support vector machines. If you come across a passage that is not explained well, I recommend simply skipping it.

2.1 K Nearest Neighbors

Figure 2.1 Two of the three closest points are red triangles, so the point to be classified should be a red triangle

This is a classification problem. For the point to be classified, find the several labeled data points closest to it and assign it the label held by the majority of them (majority vote).
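A minimal Python sketch of this voting procedure follows. The function name `knn_classify`, the coordinates, and the choice of Euclidean distance are assumptions for illustration; the labels simply mirror the shapes in Figure 2.1.

```python
import math
from collections import Counter

def knn_classify(labeled_points, query, k=3):
    """Label `query` by majority vote among its k nearest labeled points."""
    nearest = sorted(labeled_points, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical labeled data.
training = [
    ((1.0, 1.0), "blue square"),
    ((1.5, 2.0), "blue square"),
    ((3.0, 4.0), "red triangle"),
    ((3.5, 3.5), "red triangle"),
    ((5.0, 5.0), "red triangle"),
]
label = knn_classify(training, query=(3.0, 3.0), k=3)
print(label)
```

Here the three nearest neighbors of the query are two red triangles and one blue square, so the vote goes to the red triangle.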

2.2 k-Means Clustering

Figure 2.2 Iterating until the "clustering of objects" is complete

The goal of k-means clustering is to find a partition that minimizes the sum of squared distances from each data point to the center of its cluster. Initialize k center points; compute the distance from each data point to these centers using Euclidean distance or another distance measure, and assign each data point to the class of its closest center; then compute a new center from the data points assigned to each class to replace the previous center. Repeat this process until the positions of the centers converge and no longer change.
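The loop described above can be written down as a short Python sketch. The function name `k_means`, the `max_iter` safety limit, the sample points, and the hand-picked initial centers are assumptions for illustration; real implementations usually pick the initial centers more carefully.

```python
import math

def k_means(points, centers, max_iter=100):
    """Alternate between assigning points to centers and recomputing the centers."""
    centers = list(centers)
    clusters = []
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest center.
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: math.dist(p, centers[i]))
            clusters[nearest].append(p)
        # Update step: each center moves to the mean of its cluster.
        new_centers = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else center
            for cluster, center in zip(clusters, centers)
        ]
        if new_centers == centers:  # converged: the centers no longer move
            break
        centers = new_centers
    return centers, clusters

# Hypothetical data: two obvious groups.
points = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0), (8.0, 8.0), (8.0, 9.0), (9.0, 8.0)]
centers, clusters = k_means(points, centers=[(0.0, 0.0), (10.0, 10.0)])
print(centers)
```

The result depends on the initial centers, so in practice the algorithm is often run several times with different starting points.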

2.3 Decision Tree

Figure 2.3 Judging whether it is suitable to play today through a decision tree

A decision tree is expressed much like a chain of if-else statements, except that when the tree is generated from data, information gain is used to decide which attribute to split on first. The advantage of decision trees is that they are easy to interpret: people can readily see how a conclusion was reached.
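To show what "using information gain to pick the first attribute" means in practice, here is a small Python sketch. The function names and the tiny weather table are made up for illustration; the entropy-based definition of information gain is the standard one.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())

def information_gain(rows, attribute, target):
    """How much knowing `attribute` reduces the entropy of `target`."""
    base = entropy([row[target] for row in rows])
    remainder = 0.0
    for value in {row[attribute] for row in rows}:
        subset = [row[target] for row in rows if row[attribute] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return base - remainder

# Hypothetical data: in this toy table, only the outlook decides the outcome.
rows = [
    {"outlook": "sunny", "windy": False, "play": "yes"},
    {"outlook": "sunny", "windy": True, "play": "yes"},
    {"outlook": "rainy", "windy": False, "play": "no"},
    {"outlook": "rainy", "windy": True, "play": "no"},
]
gains = {attr: information_gain(rows, attr, "play") for attr in ("outlook", "windy")}
first_split = max(gains, key=gains.get)
print(gains, first_split)
```

The attribute with the largest gain becomes the root of the tree, and the same calculation is repeated on each branch.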

2.4 Naive Bayes Classifier

Naive Bayes is a classification method based on Bayes' theorem and the assumption that features are conditionally independent given the class. The joint probability distribution is learned from the training data, and the posterior probability distribution is then derived from it. (Sorry, I have no picture and I am not posting the formula, so let's leave it at that -_-)
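In place of the formula, a small Python sketch may help. It assumes categorical features and add-one (Laplace) smoothing, neither of which is stated in the text, and the function names and the toy spam data are invented for illustration. The score for each class is the class prior multiplied by the per-feature conditional probabilities, which is proportional to the posterior.

```python
from collections import Counter, defaultdict

def train_naive_bayes(samples):
    """Count what is needed to estimate P(label) and P(feature value | label)."""
    label_counts = Counter(label for _, label in samples)
    value_counts = defaultdict(Counter)  # (label, feature index) -> counts of each value
    distinct_values = defaultdict(set)   # feature index -> values seen in training
    for features, label in samples:
        for i, value in enumerate(features):
            value_counts[(label, i)][value] += 1
            distinct_values[i].add(value)
    return label_counts, value_counts, distinct_values

def predict(model, features):
    """Return the most probable label and the unnormalized posterior scores."""
    label_counts, value_counts, distinct_values = model
    total = sum(label_counts.values())
    scores = {}
    for label, count in label_counts.items():
        score = count / total  # prior P(label)
        for i, value in enumerate(features):
            # P(value | label) with add-one smoothing.
            score *= (value_counts[(label, i)][value] + 1) / (count + len(distinct_values[i]))
        scores[label] = score
    return max(scores, key=scores.get), scores

# Hypothetical data: features are (contains_link, contains_greeting).
samples = [
    (("yes", "no"), "spam"), (("yes", "no"), "spam"), (("yes", "yes"), "spam"),
    (("no", "yes"), "ham"), (("no", "yes"), "ham"), (("no", "no"), "ham"),
]
model = train_naive_bayes(samples)
label, scores = predict(model, ("yes", "no"))
print(label, scores)
```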

2.5 Linear Regression

Figure 2.4 Fitting a straight line with the smallest sum of squared differences from the actual values of all data points

Linear regression finds the most suitable parameters a and b for the function f(x)=ax+b by plugging in the existing data (x, y), so that the function best expresses the mapping between input and output in the existing data and can therefore predict the output for future inputs.
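For a single input variable, the best a and b in the least-squares sense can be computed directly. The Python sketch below assumes that criterion; the function name `fit_line` and the sample data are made up for illustration.

```python
def fit_line(xs, ys):
    """Least-squares fit of f(x) = a*x + b to the data points (x, y)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    a = covariance / variance
    b = mean_y - a * mean_x
    return a, b

# Hypothetical data that lies exactly on y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
a, b = fit_line(xs, ys)
prediction = a * 5.0 + b  # predicted output for a new input x = 5
print(a, b, prediction)
```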

2.6 Logistic Regression

|

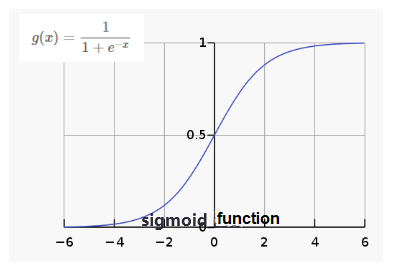

| Figure 2.5 Logical function |

The logistic regression model is actually just based on the above-mentioned linear regression, applying a logistic function to convert the output of the linear regression into a value between 0 and 1 through the logistic function, which is convenient to express the probability of belonging to a certain class.
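The squashing step can be sketched in Python as follows. The function names and the parameter values a = 1.5 and b = -3.0 are assumptions for illustration; in a real model, a and b would be learned from data.

```python
import math

def sigmoid(z):
    """Logistic function: maps any real number into the interval (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # this form avoids overflow for very negative z
    return ez / (1.0 + ez)

def predict_probability(x, a, b):
    """Probability of the positive class for input x, given linear parameters a and b."""
    return sigmoid(a * x + b)

# Hypothetical parameters: the decision boundary sits at x = 2.
for x in (-10.0, 2.0, 10.0):
    print(x, predict_probability(x, a=1.5, b=-3.0))
```

Inputs on the boundary get a probability of 0.5, and the probability approaches 0 or 1 as the input moves away from it.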

2.7 Hidden Markov Model

Figure 2.6 Transition probabilities between hidden states x and the probabilities of observing y in state x

A hidden Markov model is a probabilistic model of time series. It describes a process in which a hidden Markov chain randomly generates a sequence of unobservable states, and each state then randomly generates an observation, yielding a sequence of observations. The hidden Markov model has three elements and three basic problems; those who are interested can look them up separately. Recently I read an interesting paper in which a hidden Markov model was used to predict at which stage American graduate students would change majors, so that measures could be taken to retain students in a given major. Will company human resources departments also use this model to predict at which stage employees will switch jobs, so that they can take steps to retain them in advance? (^_^)

2.8 Support Vector Machine

Figure 2.7 Support vectors supporting the maximum margin

A support vector machine is a binary classification model whose basic form is a linear classifier with the maximum margin defined on the feature space. As shown in Figure 2.7, because the support vectors play the key role in determining the separating hyperplane, this classification model is called a support vector machine.

A nonlinear classification problem in the input space can be transformed into a linear classification problem in a high-dimensional feature space through a nonlinear transformation (kernel function), and a linear support vector machine can then be learned in that feature space. As shown in Figure 2.8, the training points are mapped into a 3D space where the separating hyperplane can be found easily.

Figure 2.8 Converting 2D linearly inseparable to 3D linearly separable
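The idea behind Figure 2.8 can be illustrated with a small Python sketch. The explicit mapping (x1, x2) -> (x1, x2, x1² + x2²), the function names, the data, and the threshold are all assumptions chosen for the example. A real support vector machine would learn the separating plane from data and would normally use a kernel function instead of computing the mapping explicitly.

```python
import math

def lift(point):
    """Map a 2D point into 3D by adding its squared distance from the origin."""
    x1, x2 = point
    return (x1, x2, x1 * x1 + x2 * x2)

def classify(point, threshold=2.0):
    """In 3D, the flat plane z = threshold separates the two classes."""
    return "outer" if lift(point)[2] > threshold else "inner"

# Hypothetical data: one class near the origin, the other on a ring around it.
angles = (0.0, 1.5, 3.0, 4.5)
inner = [(0.5 * math.cos(t), 0.5 * math.sin(t)) for t in angles]
outer = [(2.0 * math.cos(t), 2.0 * math.sin(t)) for t in angles]

print([classify(p) for p in inner])
print([classify(p) for p in outer])
```

No straight line in the original 2D plane can separate a ring from the points inside it, but after the mapping a single plane does.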

3. Introduction to Deep Learning

Here is a brief introduction to the origin of neural networks. The order of introduction is: perceptron, multilayer perceptron (neural network), convolutional neural network, and recurrent neural network.

3.1 Perceptron

Figure 3.1 The input vector is fed into the activation function after weighted summation to obtain the result

Neural networks originated in the 1950s and 1960s, when the model was called a perceptron, with an input layer, an output layer and a hidden layer. Its disadvantage is that it cannot represent even slightly more complex functions, which is why the multilayer perceptron introduced below came about.
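The single unit described in the caption of Figure 3.1 (weighted sum followed by an activation function) can be sketched in Python as below. The step activation, the classic perceptron learning rule, the function names, and the AND/XOR examples are assumptions added for illustration. XOR is the standard example of a function that one such unit cannot represent, because no straight line separates its two classes.

```python
def perceptron_output(inputs, weights, bias):
    """Weighted sum of the inputs followed by a step activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z >= 0 else 0

def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule: nudge the weights whenever a prediction is wrong."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - perceptron_output(inputs, weights, bias)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w_and, b_and = train_perceptron(AND)
and_ok = all(perceptron_output(x, w_and, b_and) == t for x, t in AND)

w_xor, b_xor = train_perceptron(XOR)
xor_ok = all(perceptron_output(x, w_xor, b_xor) == t for x, t in XOR)

print(and_ok, xor_ok)
```

The unit learns AND after a few passes over the data, but no choice of weights lets it reproduce XOR.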

3.2 Multilayer Perceptron

Figure 3.2 Multilayer perceptron, which has multiple hidden layers between input and output

Adding multiple hidden layers to the perceptron gives it the ability to express more complex functions; the result is called a multilayer perceptron. To sound more impressive, it was named a neural network. The more layers a neural network has, the stronger its expressive power, but more layers also lead to vanishing gradients during backpropagation (BP).

3.3 Convolutional Neural Networks

Figure 3.3 General form of convolutional neural network

A fully connected neural network with many hidden layers suffers from an explosion in the number of parameters, and full connectivity does not exploit local patterns (for example, adjacent pixels in a picture are related and can form more abstract features such as eyes), so convolutional neural networks appeared. A convolutional neural network limits the number of parameters and exploits local structure, which makes it especially suitable for image recognition.
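To see how a convolution keeps the parameter count small while picking up local patterns, consider the Python sketch below. The function name `conv2d`, the 4x4 image, and the hand-written 2x2 kernel are assumptions for illustration; in a real network the kernel values are learned. As is usual in deep learning, the kernel is not flipped, so strictly speaking this is a cross-correlation.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Hypothetical 4x4 image with a vertical edge between the dark and bright halves.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# The same four weights are reused at every position in the image.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, kernel)
print(feature_map)
```

The kernel has only four parameters regardless of the image size, and the output is large exactly where the local pattern (the edge) occurs.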

3.4 Recurrent Neural Networks

Figure 3.4 A recurrent neural network can be seen as a neural network passing in time

A recurrent neural network can be regarded as a neural network that passes information through time: its depth is the length of the time sequence, and the output of its neurons can be used when processing the next sample. Ordinary fully connected neural networks and convolutional neural networks process samples independently, while recurrent neural networks can handle tasks that require learning from time-ordered samples, such as natural language processing and speech recognition.
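The "passing in time" idea can be shown with a deliberately tiny Python sketch: one neuron with scalar weights. The function name `rnn_forward`, the tanh activation, and the weight values are assumptions for illustration; real recurrent networks use weight matrices and learn them from data.

```python
import math

def rnn_forward(inputs, w_in, w_rec, bias):
    """Process a sequence step by step, feeding each output into the next step."""
    hidden = 0.0
    states = []
    for x in inputs:
        # The new state depends on the current input and on the previous state.
        hidden = math.tanh(w_in * x + w_rec * hidden + bias)
        states.append(hidden)
    return states

# Hypothetical sequence: a single pulse followed by silence.
states = rnn_forward([1.0, 0.0, 0.0], w_in=1.0, w_rec=0.5, bias=0.0)
print(states)
```

The second and third inputs are both zero, yet the outputs differ and fade gradually, because the network still remembers the first input.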

4. Personal Summaries

Machine learning is actually learning the mapping from input to output:

That is, we hope to discover the regularities in the data by learning from a large amount of it. (In unsupervised learning, the main task is to find regularities in the data itself rather than a mapping.)

To summarize, the general practice of machine learning is: choose a suitable algorithm model according to the scenarios the algorithm applies to, determine the objective function, choose a suitable optimization algorithm, and approximate the optimal value through iteration to determine the parameters of the model.

As for the future, some people say that reinforcement learning is the real hope of artificial intelligence. I hope to learn more about reinforcement learning and to deepen my understanding of deep learning so that I can read articles about deep reinforcement learning.

Finally, since I am only a novice who has been studying on my own for a few months, there are bound to be mistakes in the text.

References

[1] Li Hang. Statistical Learning Methods[M]. Beijing: Tsinghua University Press, 2012.

[2] Kriegel H P, Kröger P, Zimek A. Outlier detection techniques[J]. Tutorial at KDD, 2010.

[3] Liu F T, Ting K M, Zhou Z H. Isolation forest[C]//Data Mining, 2008. ICDM'08. Eighth IEEE International Conference on. IEEE, 2008: 413-422.

[4] Aulck L, Aras R, Li L, et al. Stem-ming the Tide: Predicting STEM attrition using student transcript data[J]. arXiv preprint arXiv:1708.09344, 2017.

[5] Li Hongyi. Deep Learning Tutorial. http://speech.ee.ntu.edu.tw/~tlkagk/slide/Deep%20Learning%20Tutorial%20Complete%20(v3)

[6] Scientific Research Jun. Differences in the internal structure of convolutional neural networks, recurrent neural networks, and deep neural networks. https://www.zhihu.com/question/346811