Source: Snowball

Abstract: From an algorithmic point of view, machine learning includes many kinds of algorithms, such as regression algorithms, instance-based algorithms, regularization algorithms, decision tree algorithms, Bayesian algorithms, clustering algorithms, association rule learning algorithms, and artificial neural network algorithms.

From an algorithmic point of view, machine learning includes many kinds of algorithms, such as regression algorithms, instance-based algorithms, regularization algorithms, decision tree algorithms, Bayesian algorithms, clustering algorithms, association rule learning algorithms, and artificial neural network algorithms. Many algorithms can be applied to a variety of specific problems, and many specific problems require several different algorithms to be used together. For reasons of space, we introduce only one of them here, probably the best known to the general public: the artificial neural network.

Artificial Neural Networks:

Since artificial intelligence aims to simulate the human thought process, some AI scientists asked: why not start by looking at how humans think?

The human brain is a complex neural network whose basic units are neurons. Each neuron is quite simple: it receives electrical signals from the preceding nerve cells and then passes electrical signals on to the next nerve cells.

Even though each neuron is simple, when there are enough of them and they are connected in just the right way, they form a neural network, and complex intelligence can emerge from simple parts. The human brain, for example, contains roughly 100 billion neurons, and each neuron has on average about 7,000 synaptic connections to other neurons. A three-year-old child's brain has about 1 quadrillion synapses. The number of synapses gradually decreases with age; an adult brain has roughly 100 trillion to 500 trillion synapses.

Although scientists have not yet fully worked out how the human brain's neural network works, AI scientists decided this did not have to stop them: they would first try to simulate a virtual neural network on a computer. That is the artificial neural network.

In an artificial neural network, each small circle simulates a "neuron". It receives input signals (that is, a set of numbers) from the neurons in the previous layer and gives each input a weight according to how important it considers the neuron that sent it. It then adds up the inputs according to their weights (each number is multiplied by its weight, and the products are summed). Next, it feeds this sum into a function (usually a nonlinear function) to compute its final result. Finally, it passes that result on to the neurons in the next layer of the network.

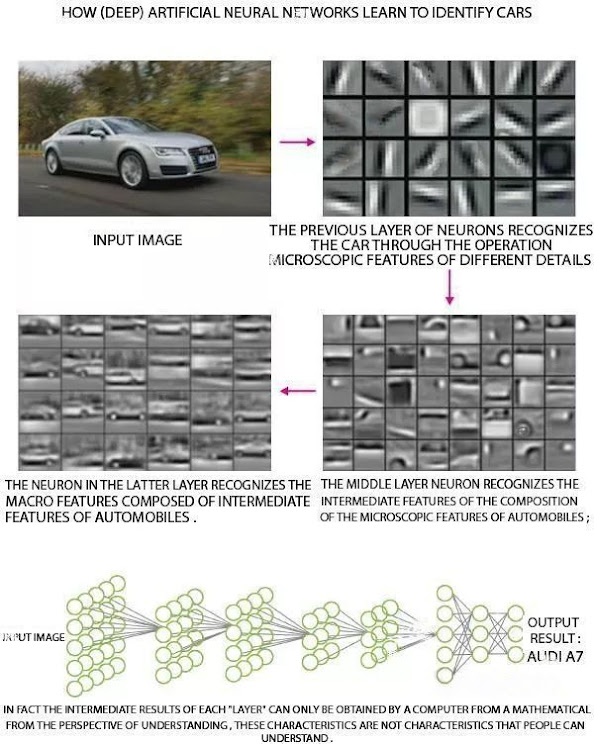
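To make the description of a neuron concrete, here is a minimal Python sketch of the calculation one artificial neuron performs, and of a layer of such neurons. It assumes the sigmoid function as the nonlinear function and adds an optional bias term, a common addition that the text above does not mention; all weights and inputs are made-up numbers.

```python
import math

def sigmoid(z):
    # A common nonlinear activation: squashes any number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias=0.0, activation=sigmoid):
    # Weighted sum: multiply each input by its weight and add everything up.
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Pass the sum through a (usually nonlinear) function to get the output.
    return activation(total)

def layer(inputs, weight_rows, biases):
    # Each neuron in a layer sees the same inputs but has its own weights.
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical numbers: 3 inputs -> a layer of 2 neurons -> 1 output neuron.
x = [1.0, 0.5, -1.0]
hidden = layer(x, [[0.2, -0.4, 0.1], [0.7, 0.3, -0.5]], [0.0, 0.1])
output = neuron(hidden, [1.5, -2.0])
print(hidden, output)
```

The outputs of one layer simply become the inputs of the next, which is all that "passing the result on to the next layer" means in this sketch.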

The neurons in an artificial neural network look very simple: each one only performs a simple calculation on the data it receives from the previous layer and then mechanically passes the result on. Surprisingly, this approach works remarkably well. With a set of sophisticated training algorithms and a large amount of data, an artificial neural network can, much like the neural network of the human brain, pick out "features" and produce results that look like "intelligent thinking".

So how does an artificial neural network learn? Learning here essentially means letting the network adjust the weights of its neurons until the performance of the whole network on a given task meets some requirement (for example, an accuracy of 90% or higher at recognizing cars).
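As a small illustration of "adjusting weights until performance meets a requirement", the sketch below uses the classic perceptron update rule (a simpler rule swapped in here, not the gradient descent method the article recommends) on a hypothetical toy task: the logical AND of two inputs. Training stops as soon as accuracy reaches a target, here 90%.

```python
# Hypothetical toy task: learn the logical AND of two inputs.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

def predict(weights, bias, x):
    # Weighted sum followed by a step function (outputs 1 if the sum is positive).
    s = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if s > 0 else 0

def accuracy(weights, bias, data):
    return sum(predict(weights, bias, x) == y for x, y in data) / len(data)

def train_until(data, target=0.9, lr=1.0, max_epochs=100):
    """Adjust weights with the perceptron rule until accuracy reaches `target`."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for epoch in range(max_epochs):
        if accuracy(weights, bias, data) >= target:
            return weights, bias, epoch
        for x, y in data:
            error = y - predict(weights, bias, x)  # -1, 0 or +1
            # Nudge each weight in the direction that would reduce this mistake.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias, max_epochs

weights, bias, epochs = train_until(AND_DATA)
print(weights, bias, epochs)  # prints: [2.0, 1.0] -2.0 5
```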

Recall the "gradient descent method" mentioned earlier. As an artificial neural network tries different weights, it is in effect walking around a map of parameter space. Each combination of weights corresponds to the network's error rate on the task, just as each point on the map has an altitude. Finding the set of weights with which the network performs best, that is, with the lowest error rate, is equivalent to finding the lowest point on the map. This is why training an artificial neural network usually relies on some form of "gradient descent", and why, if you want to study artificial intelligence, "gradient descent" is the first thing to master.

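The map analogy can be sketched in a few lines of Python. The `altitude` function below is a made-up error landscape over two weights with its lowest point at (3, -1); gradient descent repeatedly computes the local slope and takes a small step downhill. The learning rate 0.1 and the 100 steps are arbitrary choices for illustration; a real network has far more weights and a much bumpier landscape.

```python
def altitude(w1, w2):
    # Hypothetical error landscape over two weights; its lowest point is (3, -1).
    return (w1 - 3) ** 2 + (w2 + 1) ** 2

def slope(w1, w2):
    # Partial derivatives of altitude(): which way is uphill, and how steeply.
    return 2 * (w1 - 3), 2 * (w2 + 1)

def gradient_descent(start, learning_rate=0.1, steps=100):
    w1, w2 = start
    for _ in range(steps):
        g1, g2 = slope(w1, w2)
        # Step a little in the opposite (downhill) direction.
        w1 -= learning_rate * g1
        w2 -= learning_rate * g2
    return w1, w2

w1, w2 = gradient_descent((0.0, 0.0))
print(round(w1, 4), round(w2, 4), round(altitude(w1, w2), 6))  # prints: 3.0 -1.0 0.0
```
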
Classification of Machine Learning:

In terms of learning style, machine learning comes in many forms. We briefly describe a few of them: supervised learning, unsupervised learning, reinforcement learning, and transfer learning.

Supervised Learning:

Suppose you want to teach a computer to recognize whether the animal in a photo is a cat. You first gather hundreds of thousands of animal photos; for each one that contains a cat, you tell the computer there is a cat, and for each one that does not, you tell it there is no cat. In other words, you label in advance the data the computer will learn from. This amounts to supervising the computer's learning process.

After this supervised learning, when you show the computer a photo, it can recognize whether the photo contains a cat.
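A minimal sketch of supervised learning, assuming each photo has already been reduced to two numeric features (the features, values, and labels below are invented for illustration). It uses a 1-nearest-neighbour classifier, an instance-based algorithm chosen here for brevity rather than a neural network: a new photo receives the label of the most similar labeled example.

```python
import math

# Hypothetical labeled data: each photo reduced to two invented numeric
# features, plus the label a human supplied in advance.
training_data = [
    ((0.90, 0.80), "cat"),
    ((0.80, 0.90), "cat"),
    ((0.85, 0.75), "cat"),
    ((0.10, 0.20), "no cat"),
    ((0.20, 0.10), "no cat"),
    ((0.15, 0.30), "no cat"),
]

def classify(features, data):
    # 1-nearest-neighbour: copy the label of the most similar labeled example.
    _, label = min(data, key=lambda item: math.dist(features, item[0]))
    return label

print(classify((0.88, 0.82), training_data))  # prints: cat
print(classify((0.12, 0.25), training_data))  # prints: no cat
```

The labels in `training_data` are the "supervision": without them, the classifier would have nothing to copy.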

Unsupervised Learning:

Suppose you want to teach a computer to tell pictures of cats from pictures of dogs. You gather hundreds of thousands of pictures of cats and dogs (and no other animals), but you do not tell the computer which are cats and which are dogs. In other words, you do not label the data in advance, so you are not supervising the computer's learning process.

After this unsupervised learning, the computer can sort the photos you give it into two broad groups based on their similarity (that is, it separates cats from dogs). However, the computer groups them only by the mathematical characteristics of the digital images, not from a zoological point of view.
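A minimal sketch of unsupervised learning using k-means clustering with k = 2, a standard clustering algorithm swapped in for illustration (the article does not name one). The points are invented two-feature "photos" with no labels; the algorithm groups them purely by distance and has no idea which group is "cats". For simplicity it takes the first two points as starting centres, which works here only because they happen to come from different groups; practical implementations usually choose starting centres randomly or with a smarter scheme.

```python
import math

def k_means(points, k=2, iterations=10):
    # Simplification: use the first k points as the starting centres.
    centres = list(points[:k])
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the group of its nearest centre.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centres[i]))
            clusters[nearest].append(p)
        # Update step: move each centre to the average of its group.
        for i, members in enumerate(clusters):
            if members:
                centres[i] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return centres, clusters

# Hypothetical unlabeled "photos", each reduced to two invented features.
photos = [(0.90, 0.80), (0.10, 0.20), (0.80, 0.90),
          (0.20, 0.10), (0.85, 0.75), (0.15, 0.30)]
centres, groups = k_means(photos)
print(groups)
```
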

Reinforcement Learning:

Suppose you want to teach a computer to control a robotic arm that plays table tennis. At first, the computer-controlled arm behaves clumsily, holding the paddle and making lots of random movements that miss the point entirely.

But whenever the arm happens to return a ball onto the opponent's side of the table, we give the computer a point; this is called a reward. Whenever the arm fails to return the ball, or hits it to the wrong place, we take a point away; this is called a penalty. After a great deal of training, the arm gradually learns the basic movements of receiving and hitting the ball from these rewards and penalties.
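The reward-and-penalty idea can be sketched as a greatly simplified reinforcement learning problem: a multi-armed bandit solved with an epsilon-greedy strategy. The three swing options and the `reward` function are hypothetical stand-ins for a real table-tennis simulator. The program keeps a running average reward for each action, usually picks the best one found so far, and occasionally (with probability `epsilon`) tries a random one.

```python
import random

# Hypothetical actions and rewards standing in for a real table-tennis simulator.
ACTIONS = ["swing early", "swing on time", "swing late"]

def reward(action):
    # +1 (reward) if the ball lands on the opponent's side, -1 (penalty) otherwise.
    return 1 if action == "swing on time" else -1

def learn(episodes=500, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    value = {a: 0.0 for a in ACTIONS}  # running average reward of each action
    count = {a: 0 for a in ACTIONS}
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)          # explore: try something random
        else:
            action = max(ACTIONS, key=value.get)  # exploit: best action so far
        r = reward(action)
        count[action] += 1
        value[action] += (r - value[action]) / count[action]  # update the average
    return value

values = learn()
print(max(values, key=values.get))  # prints: swing on time
```

A real robotic arm faces a continuous sequence of states and movements, so practical systems use richer methods; the sketch only shows how rewards and penalties steer the choice.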

Transfer Learning:

Suppose that after teaching a computer to control a robotic arm to play table tennis, you then ask it to learn tennis. The computer does not need to learn from scratch, because the rules of table tennis and tennis are similar: in both games, for example, the ball must be hit onto the opponent's side of the table or court. The computer can therefore transfer the actions it has already learned. This kind of learning is called transfer learning.
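A minimal sketch of the transfer idea, assuming two hypothetical, closely related tasks that are both simple straight-line fits trained by gradient descent. The model is first trained on task A; its learned weights then serve as the starting point for task B, and the number of steps needed is compared with training task B from scratch. The tasks, learning rate, and tolerance are invented for illustration.

```python
def train(xs, ys, w=0.0, b=0.0, learning_rate=0.1, tol=1e-4, max_steps=10_000):
    """Fit y = w*x + b by gradient descent on mean squared error.

    Returns the final (w, b) and the number of steps taken to reach `tol`.
    """
    n = len(xs)
    for step in range(max_steps):
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        loss = sum(r * r for r in residuals) / n
        if loss < tol:
            return w, b, step
        grad_w = 2 * sum(r * x for r, x in zip(residuals, xs)) / n
        grad_b = 2 * sum(residuals) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, max_steps

xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
task_a = [2.0 * x + 1.0 for x in xs]  # hypothetical "table tennis" task
task_b = [2.2 * x + 1.0 for x in xs]  # hypothetical, closely related "tennis" task

w_a, b_a, _ = train(xs, task_a)                         # learn task A first
_, _, steps_scratch = train(xs, task_b)                 # task B starting from zero
_, _, steps_transfer = train(xs, task_b, w=w_a, b=b_a)  # task B from A's weights
print(steps_scratch, steps_transfer)
```

Because the two tasks are similar, starting from task A's weights should leave much less ground to cover; if the tasks were unrelated, the head start could be worthless.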

The Future Intelligence Laboratory is an interdisciplinary research institution covering artificial intelligence, the Internet, and brain science, jointly established by artificial intelligence scientists and relevant institutes of the Academy of Sciences.

The main work of the Future Intelligence Laboratory includes building an IQ evaluation system for AI systems, conducting worldwide AI IQ evaluations, and providing services to raise the intelligence level of industries and cities.